What does SWE-bench Verified actually measure?

It is one of the best tests of AI coding, but limited by its focus on simple bug fixes in familiar repositories

SWE-bench Verified is a set of 500 issues and patches from real Python repositories. Each benchmark sample contains:

- The repository before the PR was merged
- The issue description(s)

For evaluation it also contains:

- The original, human-written solution
- A set of tests

The model is put in the position of a maintainer: given the issue description, it has to patch the repository to resolve the issue and make tests pass. This is much more realistic than assessing models on LeetCode-style tasks.
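As a rough illustration of this setup, the sketch below models one sample and the pass/fail check. The class name, field names, and helper functions are hypothetical and chosen for this example only; they are not the benchmark's actual schema or harness.

```python
from dataclasses import dataclass, field


@dataclass
class Sample:
    """Hypothetical container for one benchmark sample (field names are illustrative)."""
    repo_snapshot: str    # the repository before the PR was merged
    issue_text: str       # the issue description(s)
    reference_patch: str  # the original, human-written solution (evaluation only)
    tests: list[str] = field(default_factory=list)  # grading tests (evaluation only)


def model_inputs(sample: Sample) -> dict[str, str]:
    """Return only the parts of a sample that the model gets to see."""
    return {"repo_snapshot": sample.repo_snapshot, "issue_text": sample.issue_text}


def is_resolved(sample: Sample, test_results: dict[str, bool]) -> bool:
    """Count a sample as resolved only if every grading test passed.

    Tests with no recorded result are treated as failures, and a sample
    with no tests is never counted as resolved.
    """
    if not sample.tests:
        return False
    return all(test_results.get(name, False) for name in sample.tests)
```

The point of the split is that the reference patch and the tests are held back from the model and used only for grading, so the model works from the same information a maintainer would have.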

We think a relatively low fraction (~5-10%) of SWE-bench Verified’s issues are incorrect and unsolvable. The issues are all from live codebases. Human annotators filtered the issues from a larger set, SWE-bench. We manually analyzed 40 issues.

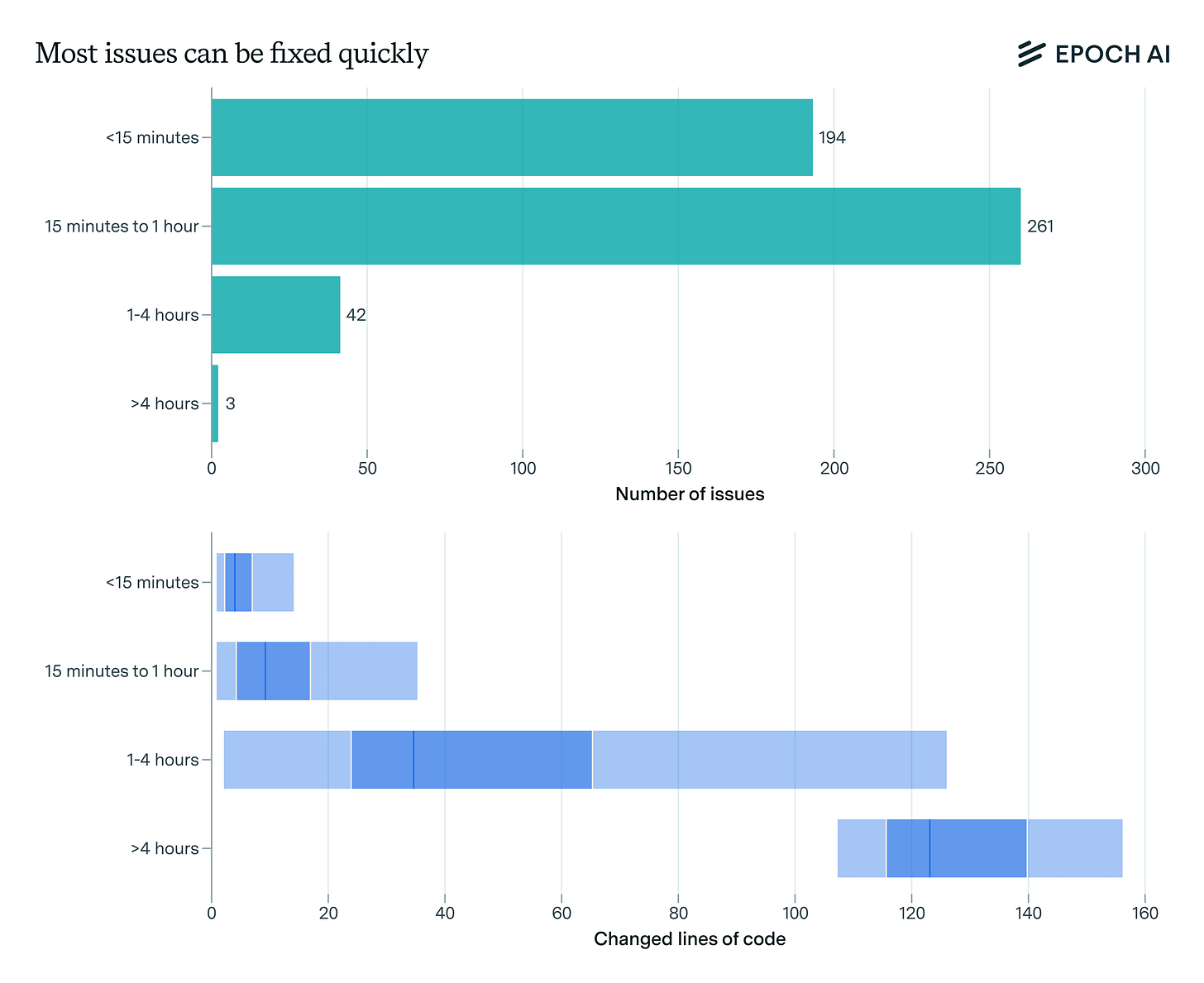

People sometimes equate SWE-bench Verified scores with general software engineering ability. Instead, SWE-bench Verified predicts whether an AI can fix simple issues (taking a software engineer at most a couple of hours to solve) in a codebase.

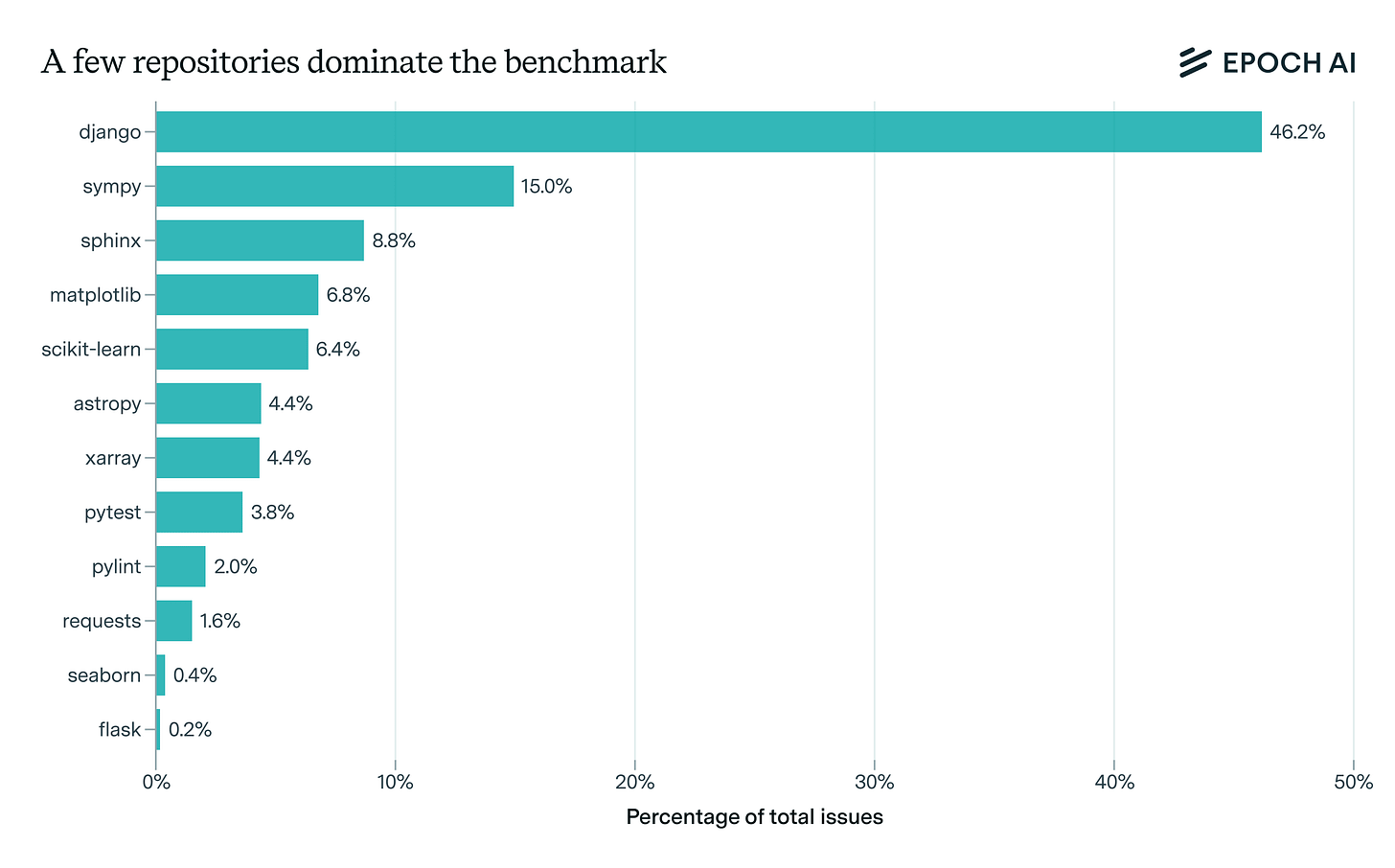

Furthermore, the low diversity of codebases limits external validity. Django comprises nearly half of all issues and five repositories account for over 80% of the benchmark.
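One way to check this kind of concentration is to count issues per repository and sum the shares of the largest few. The sketch below does that on a toy list; the function names and the toy data are assumptions made for illustration, not the benchmark's real counts.

```python
from collections import Counter


def repo_shares(repos: list[str]) -> list[tuple[str, float]]:
    """Return (repository, share of issues) pairs, largest share first."""
    total = len(repos)
    if total == 0:
        return []
    return [(repo, n / total) for repo, n in Counter(repos).most_common()]


def top_k_share(repos: list[str], k: int) -> float:
    """Return the combined share of the k repositories with the most issues."""
    return sum(share for _, share in repo_shares(repos)[:k])


# Toy data only: one repository name per issue.
toy = ["django"] * 5 + ["sympy"] * 2 + ["sphinx"] * 2 + ["other"] * 1
largest_repo, largest_share = repo_shares(toy)[0]
```

Run on the real list of per-issue repository names, `top_k_share(repos, 5)` would give the five-repository figure quoted above.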

Contamination is a major concern for SWE-bench Verified: since all the issues are sourced from famous repositories and date from 2023 or earlier, it’s likely that current models have been trained on these repositories.

Recently, many benchmarks have been developed that build on SWE-bench Verified’s foundation, such as SWE-bench Multimodal, SWE-bench Multilingual, Multi-SWE-bench, and Terminal-Bench. We may review them in future issues.

Read the full report on our website.