Notes on GPT-5 training compute

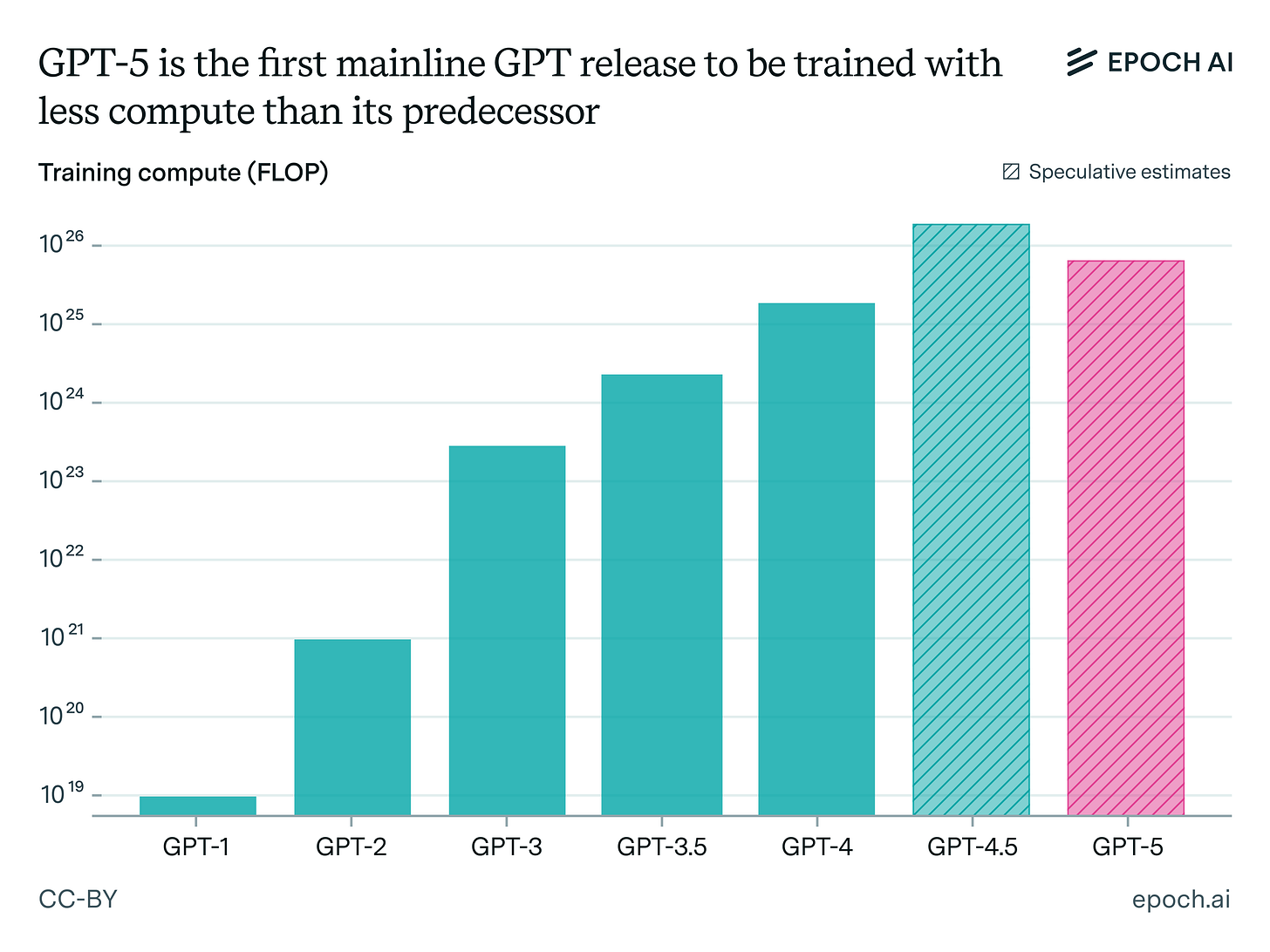

We recently wrote that GPT-5 was likely trained on less compute than its predecessor, GPT-4.5. How did we reach this conclusion, and what do we actually know about how GPT-5 was trained?

Our best guess: GPT-5 was trained on ~5e25 FLOP total, including both pre-training and reinforcement learning. That would be more than twice as much as GPT-4 (~2e25 FLOP), but less than GPT-4.5 (>1e26 FLOP).

Here’s how it breaks down:

Pre-training

Training compute is roughly proportional to a model’s active parameter count multiplied by its number of training tokens. Based on price, speed, and prevailing industry trends, GPT-5 is probably a “mid-sized” frontier model with ~100B active parameters, akin to Grok 2 (115B active), GPT-4o, and Claude Sonnet. GPT-5’s pre-training token count is unconfirmed, but Llama 4 and Qwen3 were pre-trained on 30-40 trillion tokens.
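A widely used rule of thumb, which is our addition here and not part of the original estimate, approximates dense-transformer training compute as about 6 FLOP per active parameter per training token. Taking the ~100B active parameters above and the 30T-token lower end as assumed inputs gives a rough floor for pre-training:

```latex
C_{\text{pre}} \approx 6\,N_{\text{active}}\,D
  \approx 6 \times \left(1\times10^{11}\right) \times \left(3\times10^{13}\right)
  \approx 1.8\times10^{25}\ \text{FLOP}
```

Here $N_{\text{active}}$ is the active parameter count and $D$ the number of pre-training tokens; both plugged-in values are illustrative assumptions, not confirmed figures for GPT-5.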

OpenAI has invested heavily in pre-training, so GPT-5 was likely trained on at least 30T tokens, possibly several times more. This gives a median estimate of ~3e25 FLOP for pre-training.
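To see how the token count drives the pre-training figure, here is a minimal sketch assuming the common C ≈ 6·N·D approximation and ~100B active parameters. The function name `pretrain_flop` and the token counts swept are illustrative choices, not part of the original analysis.

```python
# Rough pre-training compute under the common C ≈ 6·N·D rule of thumb.
# N (active params) and D (tokens) are illustrative assumptions, not confirmed figures.

ACTIVE_PARAMS = 100e9  # ~100B active parameters (assumed "mid-sized" model)


def pretrain_flop(active_params: float, tokens: float) -> float:
    """Approximate training FLOP: ~6 FLOP per active parameter per token."""
    return 6.0 * active_params * tokens


if __name__ == "__main__":
    # Sweep from the 30T-token lower bound up to "several times more".
    for tokens in (30e12, 50e12, 100e12):
        flop = pretrain_flop(ACTIVE_PARAMS, tokens)
        print(f"{tokens / 1e12:>5.0f}T tokens -> {flop:.1e} FLOP")
```

Under these assumptions, 30T tokens gives about 1.8e25 FLOP, and a ~3e25 FLOP median corresponds to roughly 50T tokens at 100B active parameters.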

Post-training

Next is reinforcement learning (RL) during post-training, which adds more uncertainty. In early 2025, RL compute was small, perhaps 1-10% of pre-training compute. But it is scaling up fast: OpenAI scaled RL by 10× from o1 to o3, and xAI did the same from Grok 3 to Grok 4. Did OpenAI scale RL to match or exceed pre-training compute for GPT-5? It’s possible. But reports suggest that training GPT-5 wasn’t straightforward, and that OpenAI may have focused on different skills for GPT-5 than for o3, which points to more experimentation rather than simple scaling.
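The “match or exceed” question comes down to simple arithmetic. The sketch below assumes RL started at 1-10% of pre-training compute, that one more 10× step was applied, and that pre-training compute stayed fixed; that last point is a simplification of ours, and `rl_share_after_scaling` is a hypothetical helper.

```python
# If RL compute was 1-10% of pre-training and then scaled ~10x, what share
# of pre-training would it reach? Assumes pre-training compute stays fixed.


def rl_share_after_scaling(initial_share: float, scale_factor: float) -> float:
    """Return RL compute as a fraction of (unchanged) pre-training compute."""
    return initial_share * scale_factor


if __name__ == "__main__":
    for share in (0.01, 0.10):
        scaled = rl_share_after_scaling(share, 10.0)
        print(f"{share:.0%} -> {scaled:.0%} of pre-training")
```

A single further 10× step would put RL at roughly 10-100% of pre-training, so reaching or passing parity would take the upper end of that range or more than one such step.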

Overall, if final RL training made up 10 to 200% of pre-training compute, that yields a median estimate of ~5e25 FLOP for GPT-5’s overall training compute, and GPT-5 was likely trained on less than 1e26 FLOP.
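As a rough check on how these pieces combine, here is a minimal point-estimate sketch assuming the ~3e25 FLOP pre-training median and the 10-200% RL range above. It is not the full uncertainty analysis: it holds pre-training fixed and only sweeps the RL fraction, and the helper name `total_flop` is our own.

```python
# Total training compute = pre-training + RL, with RL expressed as a fraction
# of pre-training. Holding pre-training at its ~3e25 FLOP median is a
# simplification; a fuller estimate would propagate its uncertainty too.

PRETRAIN_MEDIAN_FLOP = 3e25


def total_flop(pretrain_flop: float, rl_fraction: float) -> float:
    """Pre-training compute plus RL compute at rl_fraction of pre-training."""
    return pretrain_flop * (1.0 + rl_fraction)


if __name__ == "__main__":
    for frac in (0.1, 0.5, 1.0, 2.0):
        total = total_flop(PRETRAIN_MEDIAN_FLOP, frac)
        print(f"RL = {frac:.0%} of pre-training -> {total:.1e} FLOP")
```

Even at the 200% upper end, this point estimate (about 9e25 FLOP) stays below 1e26 FLOP, in line with the conclusion above.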

RL scaling remains a key factor in near-term AI progress. Recent evidence about this compute frontier is sparse, but we’re tracking it closely.

See here for detailed notes on our estimate. And for why OpenAI trained GPT-5 with relatively little compute, see our full Gradient Update.