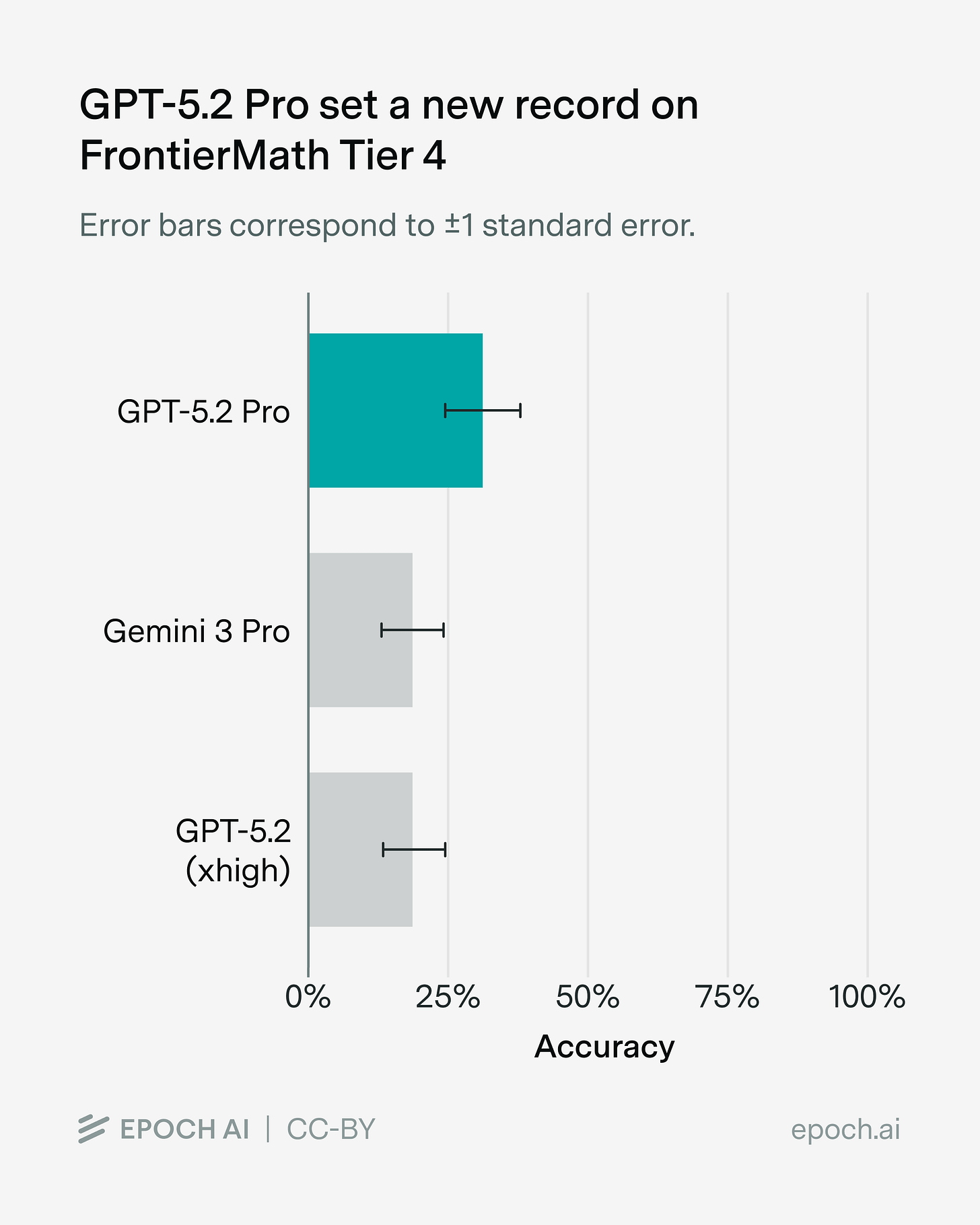

New record on FrontierMath Tier 4

GPT-5.2 Pro (manual run) scored 31%, a substantial jump over the previous high score of 19%.

We evaluated GPT-5.2 Pro manually on the ChatGPT website. We did this after encountering timeout issues with the API in our scaffold. We’re working to resolve these issues, but a manual evaluation seemed worthwhile in the meantime.

Prior to this run, 13 problems from Tier 4 had been solved by any model ever. GPT-5.2 Pro solved 11 of those, and 4 more besides. Its total for this run is thus 15/48 (31%), and the pass@the-kitchen-sink for Tier 4 (all problems solved ever) is now 17/48 (35%).
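For anyone who wants to check the tallies, here is a minimal sketch of the arithmetic. The variable names are illustrative and not part of our evaluation code; the counts are the ones quoted above.

```python
# Counts quoted in the post; variable names are hypothetical.
TOTAL_PROBLEMS = 48      # size of FrontierMath Tier 4
previously_solved = 13   # solved by any model before this run
resolved_by_pro = 11     # of those 13, solved again by GPT-5.2 Pro
newly_solved = 4         # solved for the first time in this run

# Score for this run: re-solved problems plus first-time solves.
run_score = resolved_by_pro + newly_solved

# "pass@the-kitchen-sink": every problem solved by any model, ever.
ever_solved = previously_solved + newly_solved

print(f"run score:   {run_score}/{TOTAL_PROBLEMS} ({run_score / TOTAL_PROBLEMS:.0%})")
print(f"ever solved: {ever_solved}/{TOTAL_PROBLEMS} ({ever_solved / TOTAL_PROBLEMS:.0%})")
```

The two previously solved problems that GPT-5.2 Pro missed still count toward the all-time total, which is why that figure is 17 rather than 15.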

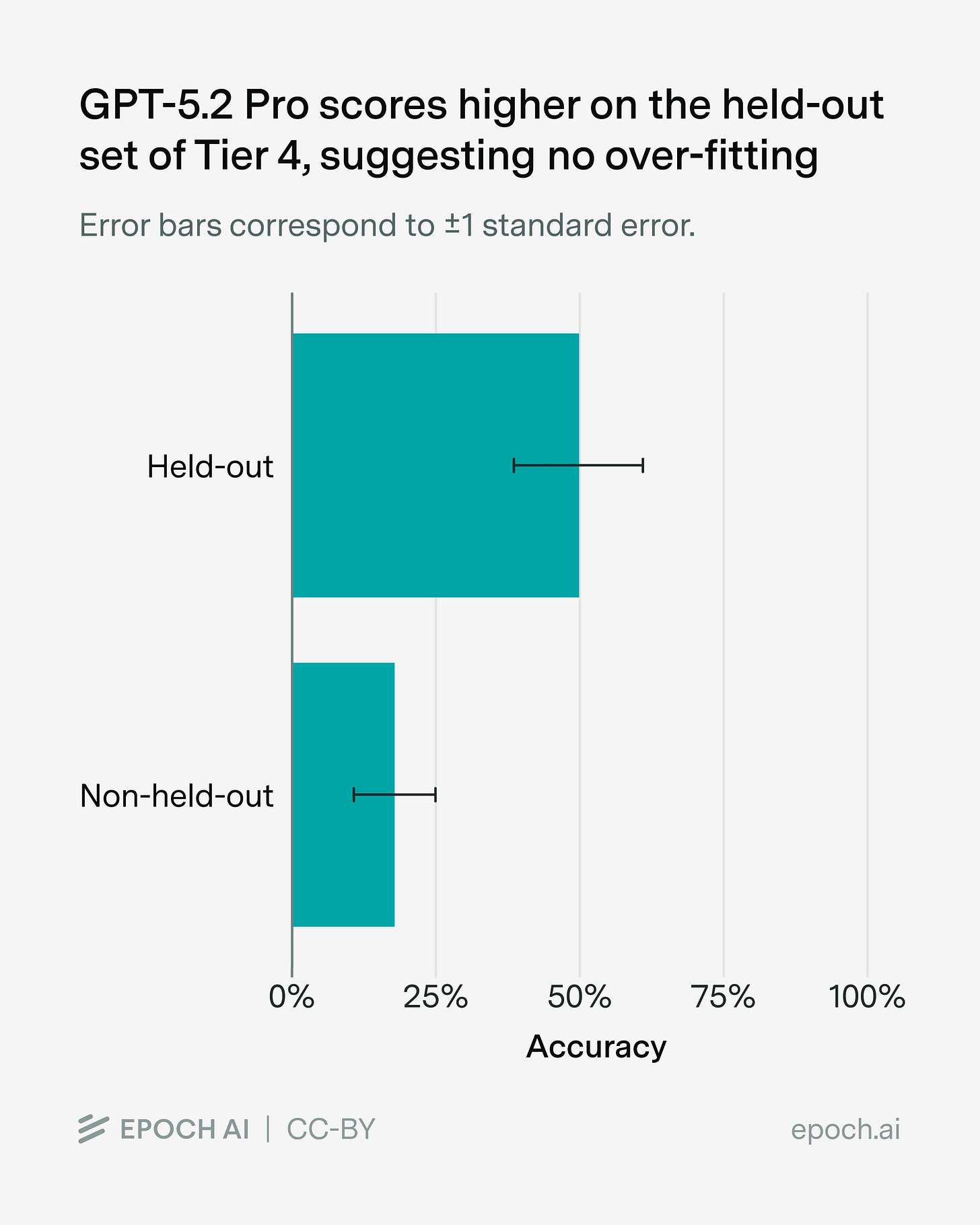

OpenAI has exclusive access to 28 Tier 4 problems and their solutions, with Epoch holding out the other 20 problems. GPT-5.2 Pro solved 5 (18%) of the non-held-out set and 10 (50%) of the held-out set. In other words, it scored higher on the problems OpenAI has never seen, so there is no evidence of overfitting.
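The split can be checked the same way. This is only a sketch of the percentages quoted above, with hypothetical names; it does not test statistical significance.

```python
# (solved, total) per split, as quoted in the post; names are hypothetical.
splits = {
    "non-held-out": (5, 28),   # problems OpenAI has access to
    "held-out": (10, 20),      # problems only Epoch holds
}

# Solve rate per split.
rates = {name: solved / total for name, (solved, total) in splits.items()}

for name, rate in rates.items():
    solved, total = splits[name]
    print(f"{name}: {solved}/{total} ({rate:.0%})")

# Sanity check: the two splits should add up to the overall run score.
total_solved = sum(solved for solved, _ in splits.values())
total_problems = sum(total for _, total in splits.values())
print(f"overall: {total_solved}/{total_problems}")
```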

During evaluation we found issues with two problems. GPT-5.2 Pro and GPT-5.2 (high) should have been credited with solving both, and GPT-5.2 (xhigh), (medium), and GPT-5 Pro should have been credited with solving one. We’ve fixed the issues and updated the scores on our hub.

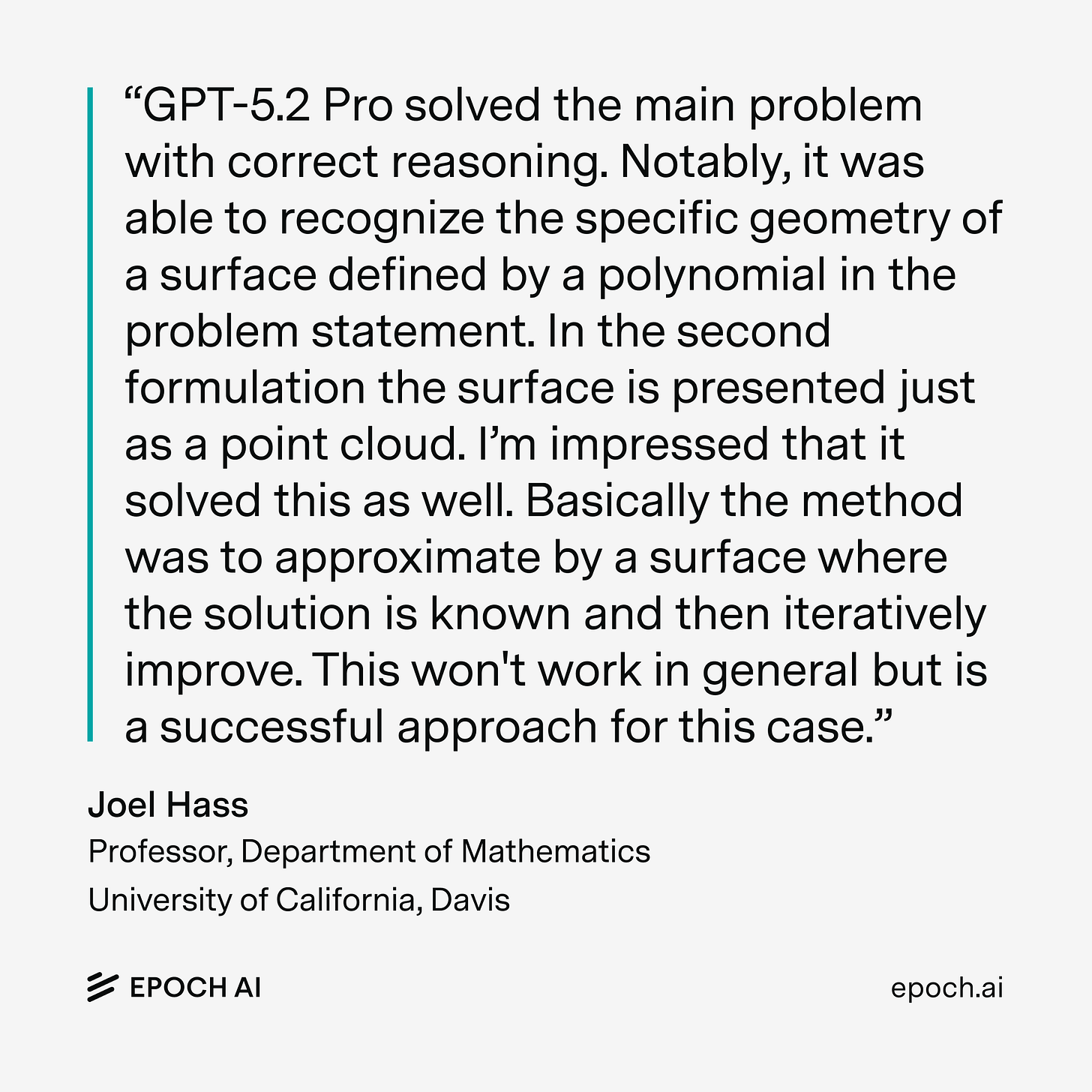

One of the newly-solved problems was from Joel Hass, whose research is in low-dimensional topology and geometry. Afterward, he suggested we try a different, more challenging formulation of the same problem. GPT-5.2 Pro solved that too.

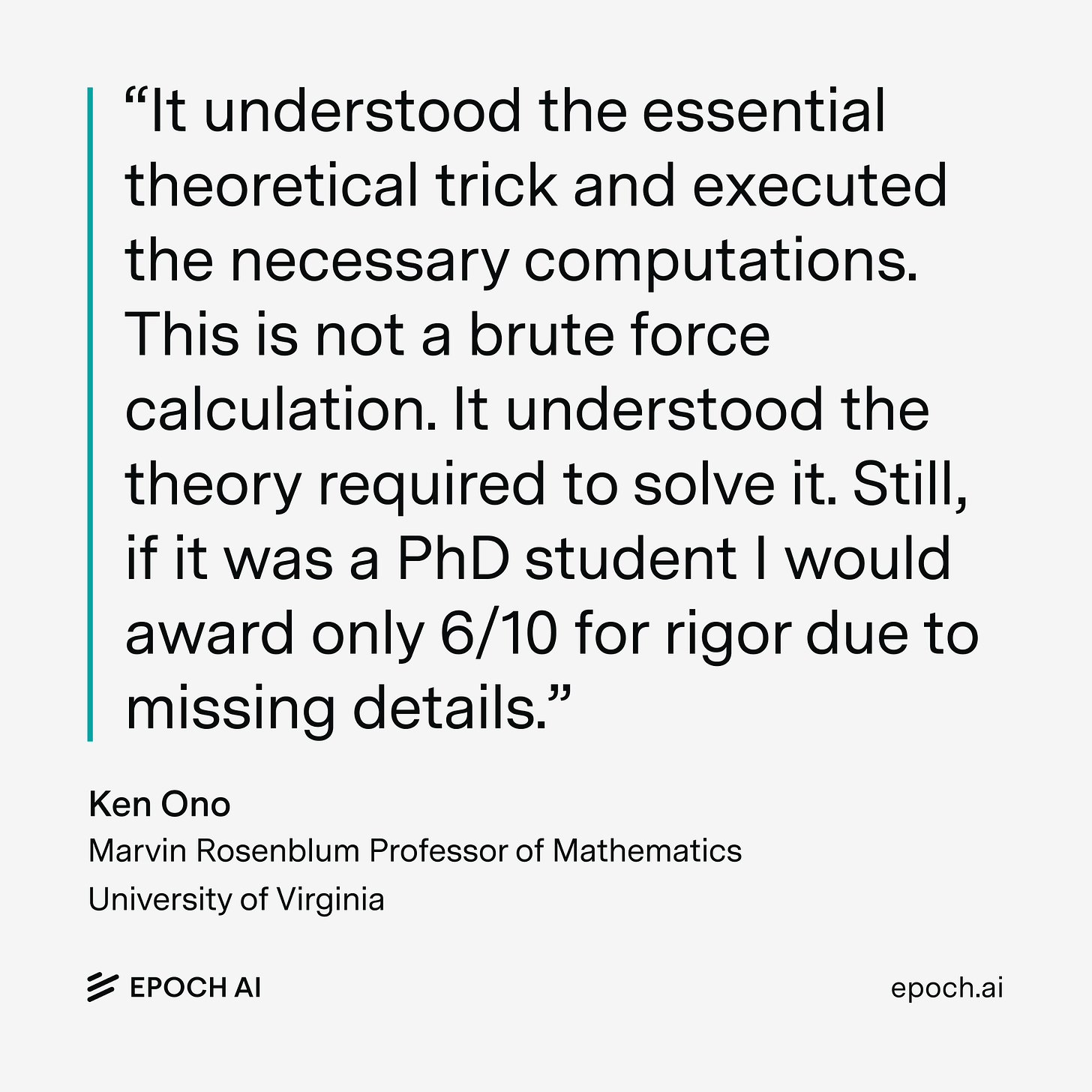

Another problem, by number theorist Ken Ono, was initially solved by GPT-5.2 (xhigh), and also by GPT-5.2 Pro. Ken gave the solution a generally favorable review, though he noted that the rigor of its prose explanation was somewhat lacking.

Another newly-solved problem was by number theorist Dan Romik. He was impressed.

A pair of problems by Jay Pantone, who works in analytic combinatorics, were solved earlier: one by GPT-5 and one by GPT-5.1. GPT-5.2 Pro solved these as well. The solutions were both valid, but Jay noted that both used numerical shortcuts that he didn’t intend.

What remains unsolved? One author thinks models get his problem wrong because they make a plausible assumption without trying to prove it. If they tried to prove it — as he had to, when he encountered the problem in his own research — they might realize the truth is more subtle.

Check our website for more info on FrontierMath and analysis of AI math capabilities!

Impressive stuff, seeing this 12 percentage point jump in a single iteration. The held-out set performance really stands out, though: models typically struggle more on unseen problem types, but here it actually went the other way. I ran into a similar thing when benchmarking solvers last year; the numerical shortcuts that Pantone mentioned are kind of inevitable without explicitly constraining proof methods.