Grok 4 Training Resource Footprint

What it takes: energy, emissions, water & cost to train a frontier AI

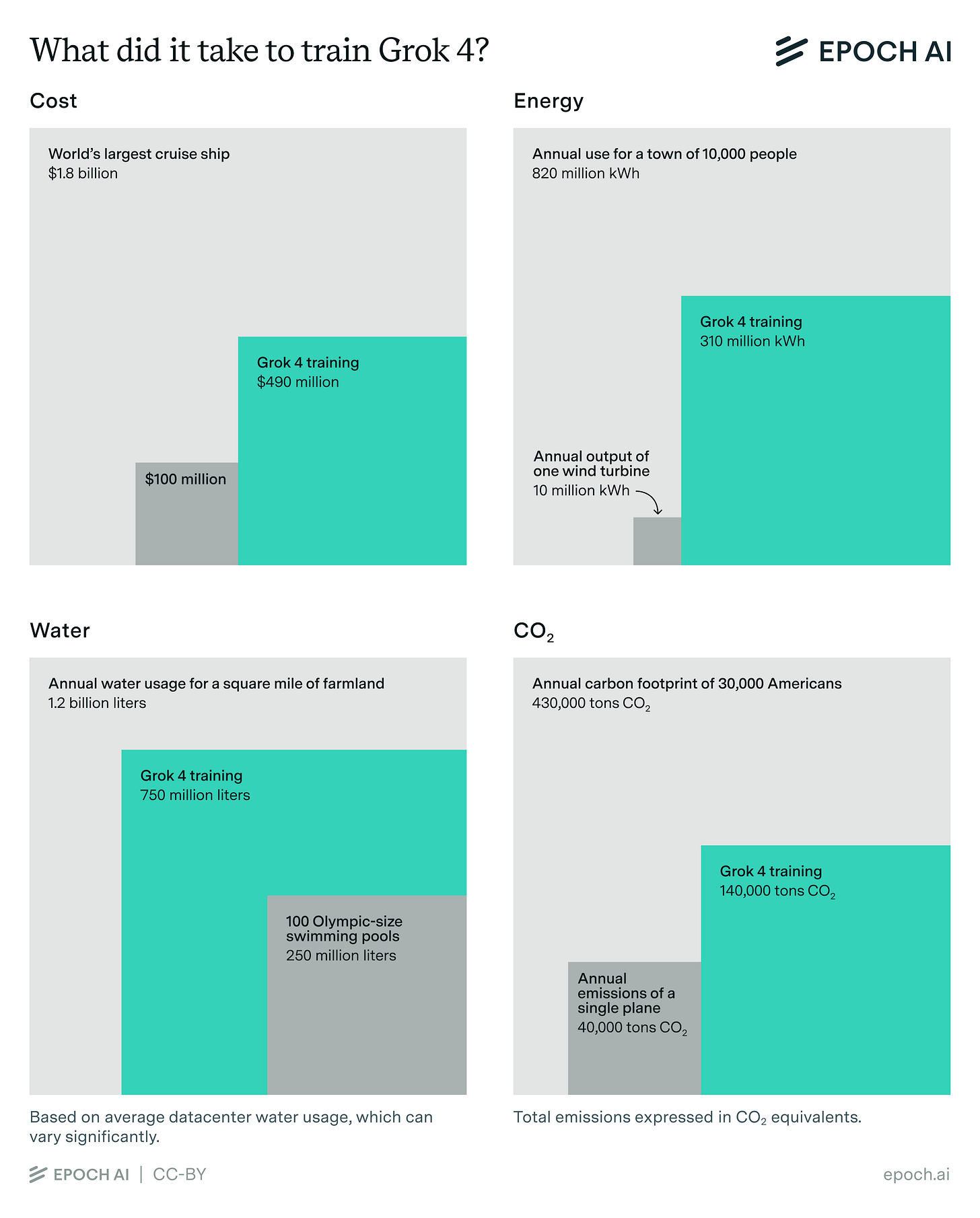

What does it take to train cutting-edge AI? We looked into the numbers for Grok 4, whose training run was the largest to date.

Training Grok 4 required a lot of electricity, enough to power a small town of 4,000 Americans for a year.

Most of this power came from natural gas, generating emissions equal to those of a Boeing jet over three years of use.

To support this much power, the supercomputer used to train Grok 4 had to be cooled with enough water to fill 300 Olympic-sized swimming pools.

Needless to say, training Grok 4 was a very expensive project. The costs of energy and running computer chips alone add up to $500 million.

And that’s only looking at the costs for training. Serving the model to users, performing experiments to develop the techniques used to train it, and human labor are all significant additional costs.

This Data Insight was written by James Sanders, Yafah Edelman and Luke Emberson. You can learn more about the analysis on our website.

I really appreciate that you put the numbers in perspective. The water consumption seems modest compared to one square mile of farmland, and the CO2 emissions not so large compared to a single plane. It really highlights that we have other technologies besides AI that are resource-intensive and that require our attention for a sustainable future.

And Grok 4 scores terribly in the Gödel's Therapy Room eval. Grok 3 scored higher. 😆