Gemini 2.5 Deep Think on FrontierMath

There is no API for Gemini 2.5 Deep Think, so we evaluated it on FrontierMath manually. The result: a new record!

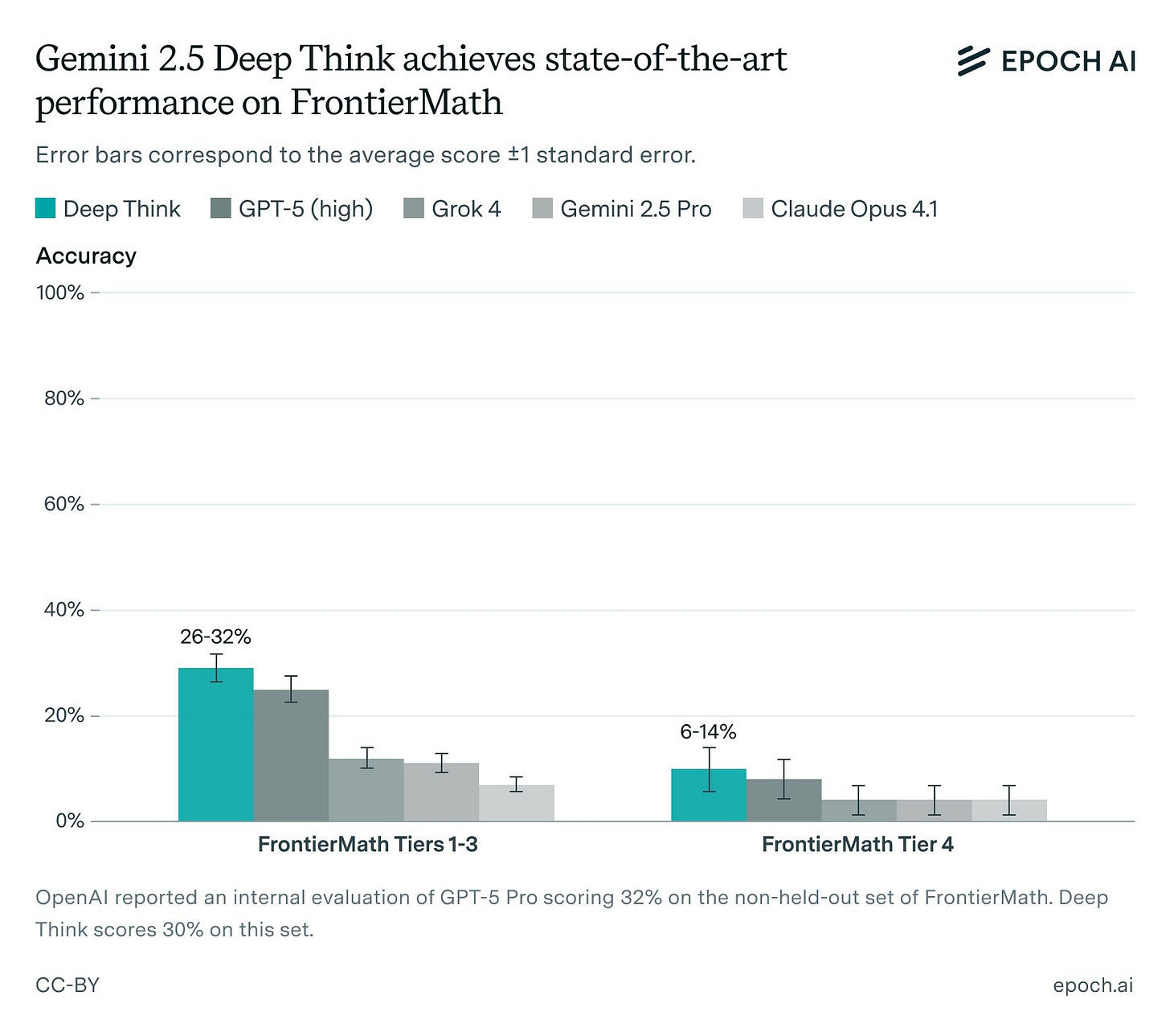

Deep Think scored 29% on FrontierMath Tiers 1–3 and 10% on Tier 4, compared to the prior records of 25% and 8% respectively, held by GPT-5 (high).
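To put the margins in perspective, here is a minimal sketch that computes the percentage-point and relative gains from the four scores quoted above. The dictionary layout and the `improvement` helper are illustrative names, not part of any evaluation tooling.

```python
# Scores quoted in the text, in percent.
scores = {
    "Tiers 1-3": {"deep_think": 29, "prior_record": 25},
    "Tier 4": {"deep_think": 10, "prior_record": 8},
}


def improvement(new, old):
    """Return (percentage-point gain, relative gain in percent)."""
    return new - old, 100 * (new - old) / old


for tier, s in scores.items():
    pp, rel = improvement(s["deep_think"], s["prior_record"])
    print(f"{tier}: +{pp} points ({rel:.0f}% relative)")
```

This only restates the gap between the headline numbers. It says nothing about statistical significance, which would depend on the number of problems in each tier.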

Note that this is the publicly available version of Deep Think, not the version that achieved a gold medal-equivalent score on the IMO. Google has described the publicly available Deep Think model as a “variation” of the IMO gold model.

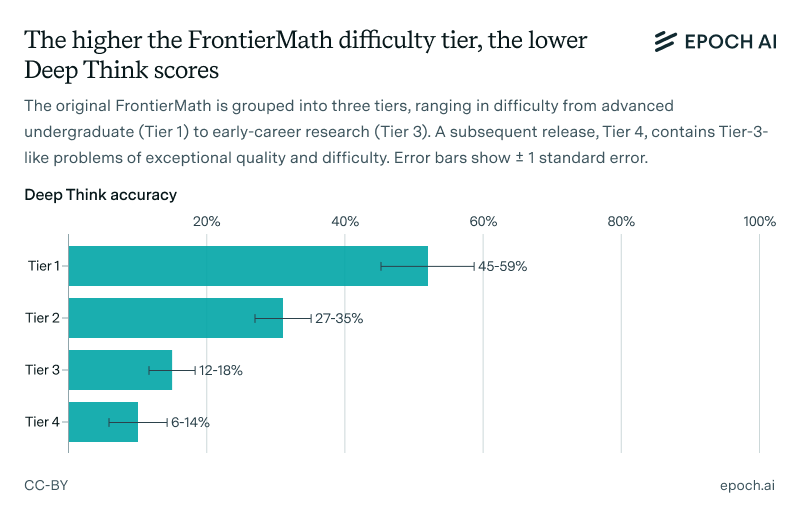

Good performance on FrontierMath requires deep background knowledge and precise execution of computations. Deep Think has made progress but hasn’t yet mastered these skills, still scoring lower on the harder tiers of the benchmark.

This version of Deep Think got a bronze medal-equivalent score on the 2025 IMO. We challenged it with two problems from the 2024 IMO that are a bit harder than the hardest problem it solved on the 2025 IMO. It failed to solve either problem even when given ten attempts.

Deep Think approaches geometry problems differently from other LLMs: rather than casting everything in coordinate systems, it works with higher-level concepts. This is also how humans tend to prefer solving geometry problems.

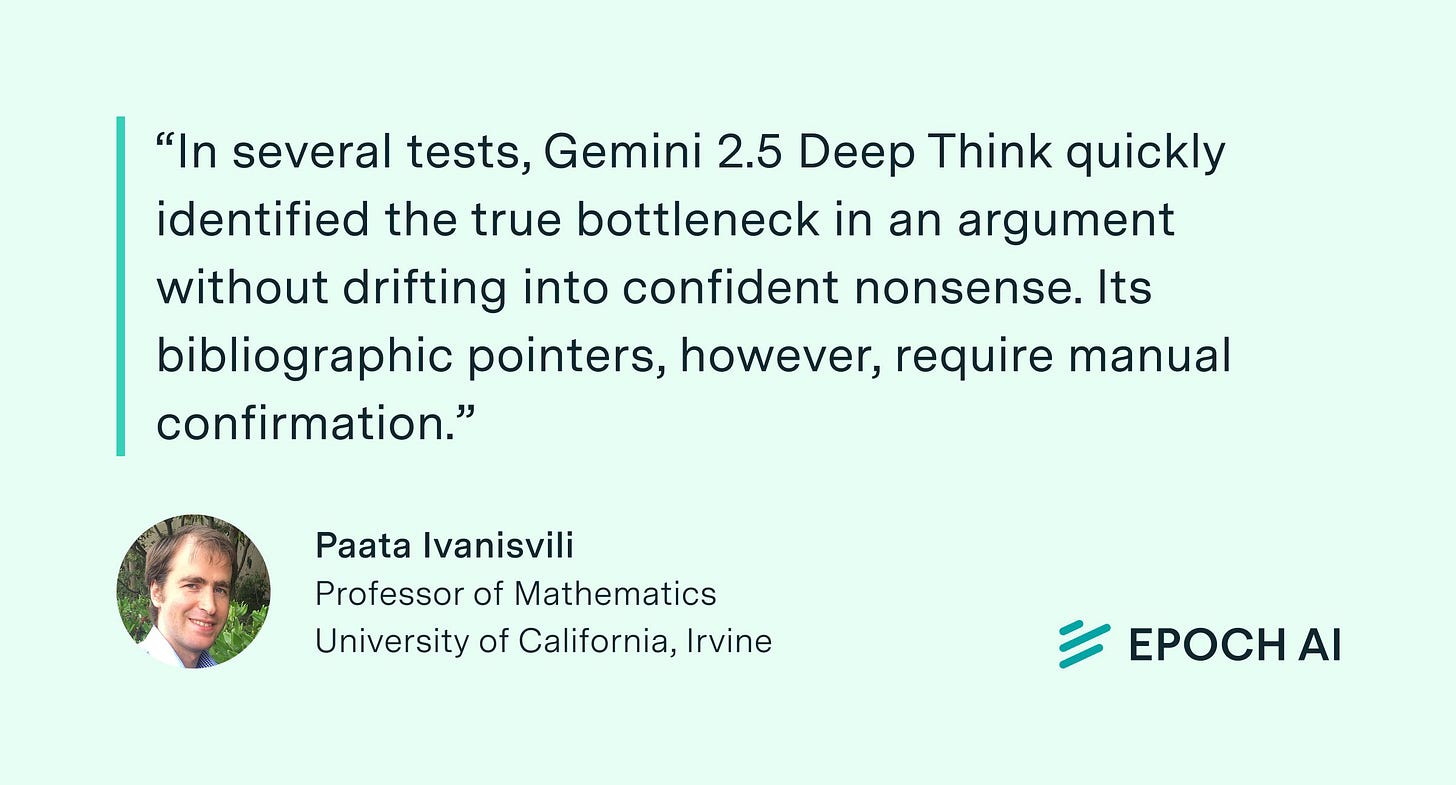

We noticed Deep Think making several bibliographic errors, referencing works that either did not exist or did not contain the claimed results. Anecdotally, this was the model’s main weakness compared to other leading models.

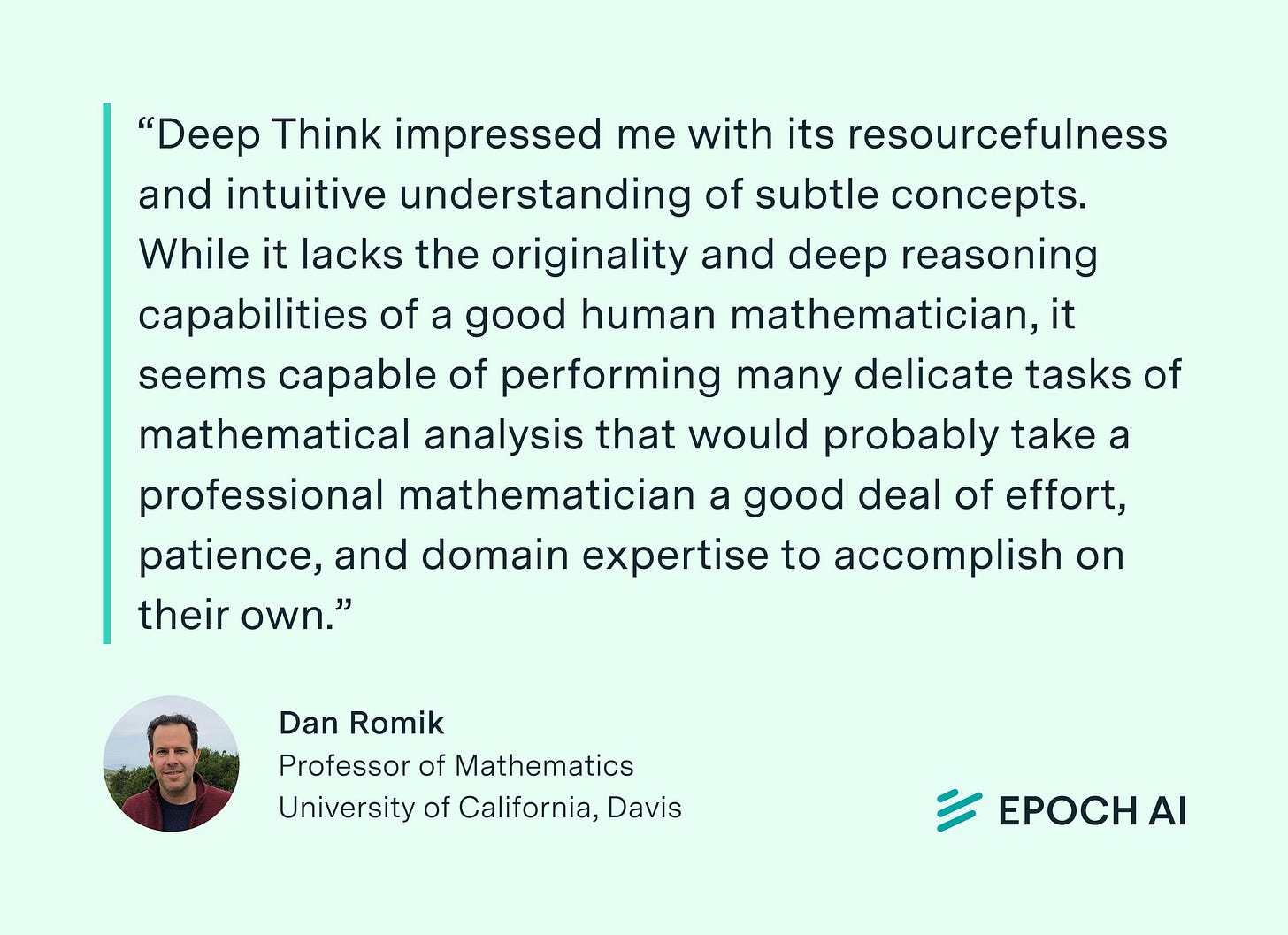

We also worked with some professional mathematicians to get their anecdotal impressions. They characterized Deep Think as a broadly helpful research assistant.